There is a growing, and entirely reasonable, concern about security and privacy in the era of artificial intelligence. Because AI relies on massive datasets, users are rightly asking: "Where is my data going, and who is using it?" To explain how we protect your information, it helps to distinguish between two distinct phases of AI: Training and Inference.

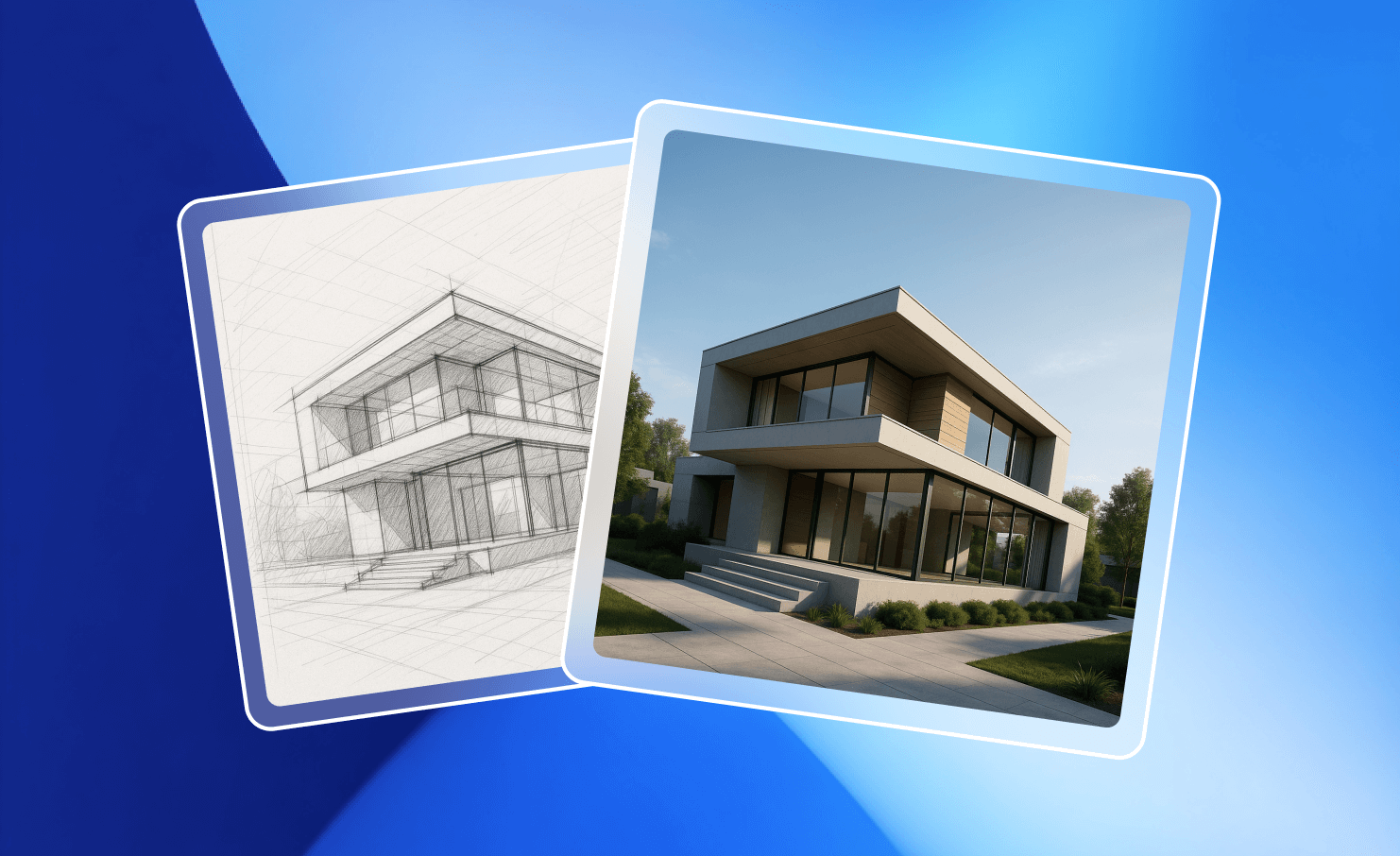

At its core, AI is designed to mimic human decision-making. To do this, it first needs "Training": a process in which the model is shown millions of examples so that it can learn patterns. For instance, if you want an AI to realistically place a chair in an empty room, it must first "see" thousands of images of chairs in various settings.

This pattern-matching requires a vast amount of information. Naturally, this is where privacy concerns arise: people fear their personal data will become part of the AI's permanent "memory."

We handle your data differently. Rather than building a model from scratch, we use highly sophisticated "pre-trained" models developed by industry leaders. The "heavy lifting" of training has already been done.

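In our software, your data is used for Inference only. That means the pre-trained model applies what it has already learned to your input in order to produce a result, and your data is not used to train or retrain the model.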

In short, the AI has already graduated. We simply put its expertise to work on your problems, without ever sending your data back to school.
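For readers who want to see the difference concretely, here is a deliberately tiny Python sketch. It is a toy illustration, not our software and not any real model: `ToyModel`, `train`, and `run_inference` are hypothetical names, and the "model" is a single number learned from example pairs. Training changes that number based on the examples; inference only reads it.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ToyModel:
    """A hypothetical one-parameter model: prediction = weight * x."""
    weight: float = 0.0

    def predict(self, x: float) -> float:
        # Reads the learned weight; nothing about the model changes here.
        return self.weight * x


def train(model: ToyModel, examples: list[tuple[float, float]],
          lr: float = 0.01, epochs: int = 200) -> None:
    """Training: each (x, y) example nudges the weight, so the data shapes the model."""
    for _ in range(epochs):
        for x, y in examples:
            error = model.predict(x) - y
            # Gradient-descent step on the squared error (1/2) * error**2.
            model.weight -= lr * error * x


def run_inference(model: ToyModel, user_input: float) -> float:
    """Inference: apply the finished model to new input.

    The input is used to compute an answer and is then discarded; it is not
    stored and not passed to train(), so the model does not learn from it.
    """
    return model.predict(user_input)


if __name__ == "__main__":
    # Phase 1 (done once, by the model's developer): learn y = 3x from examples.
    model = ToyModel()
    train(model, [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)])
    learned = model.weight

    # Phase 2 (what happens with your data): inference only.
    answer = run_inference(model, 4.0)
    assert model.weight == learned  # the model is unchanged by your input
    print(round(answer, 3))  # → 12.0
```

A real model has millions or billions of parameters instead of one, but the split is the same: training writes to the parameters, while inference only reads them.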

From the beginning, our mission has been to redefine the role of a technology company. We believe that technology should be built on a foundation of integrity, designed not to replace human effort, but to enhance our natural capabilities.

We view AI as a tool for enrichment and accessibility. By lowering the barrier to entry for complex tasks, we empower users to achieve more. Because we see the relationship between human and machine as a partnership, we have made customer privacy a non-negotiable pillar of our product design. We don't just build software; we build tools that respect the person behind the screen.

The rapid evolution of AI does not have to come at the cost of personal or corporate security. We believe that the most powerful tools are those that users can trust implicitly. By choosing an architecture that relies on inference with pre-trained models rather than training on your data, we ensure that our technology remains a powerful ally to your creativity and productivity, while keeping your data strictly under your control. Our goal is to move the industry forward, not by exploiting data, but by empowering the people who use it.